Adaptive Policy Regularization for Offline-to-Online Reinforcement Learning in HVAC Control

Published in NeurIPS CCAI & ACM BuildSys'24, 2024

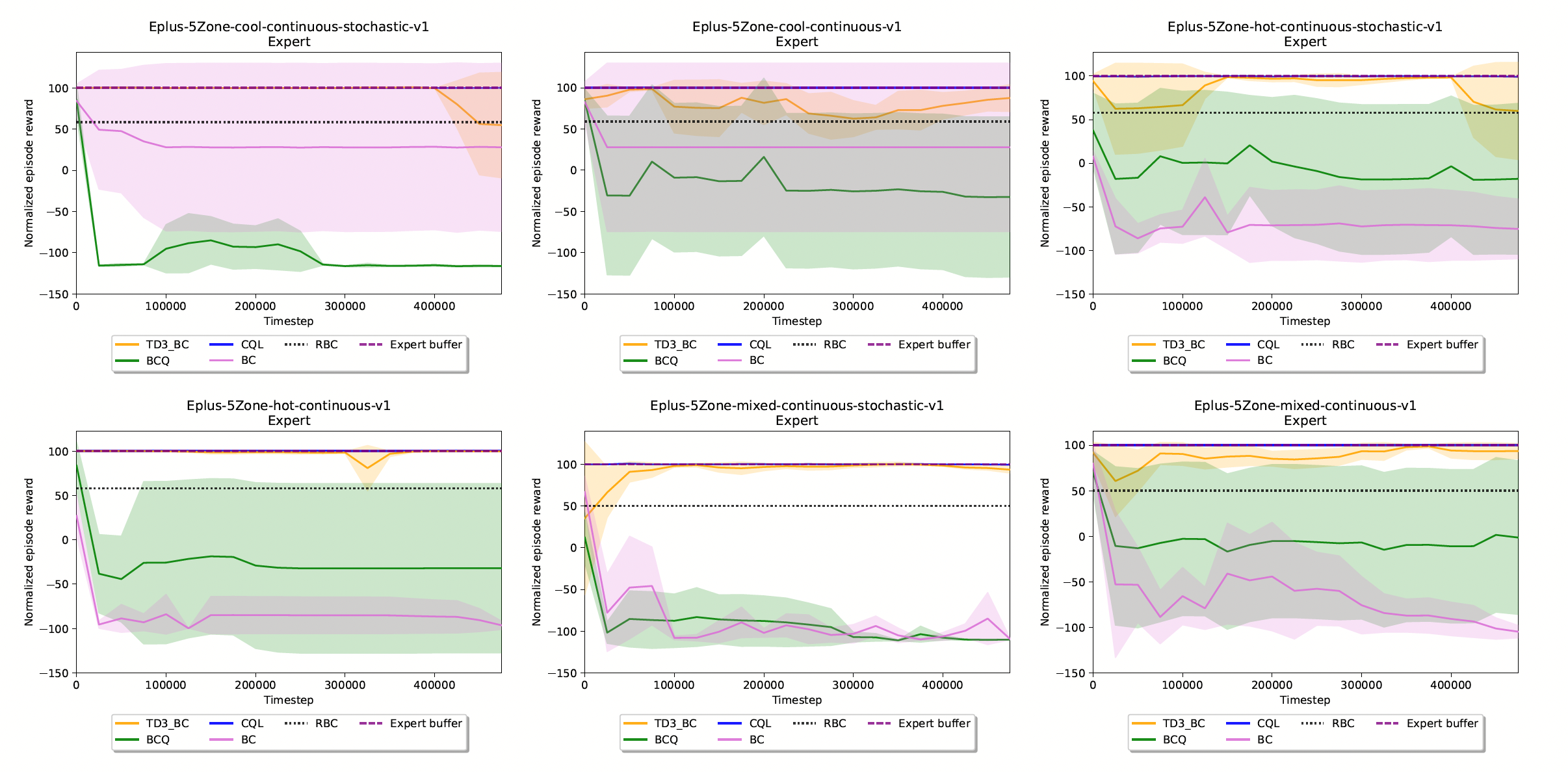

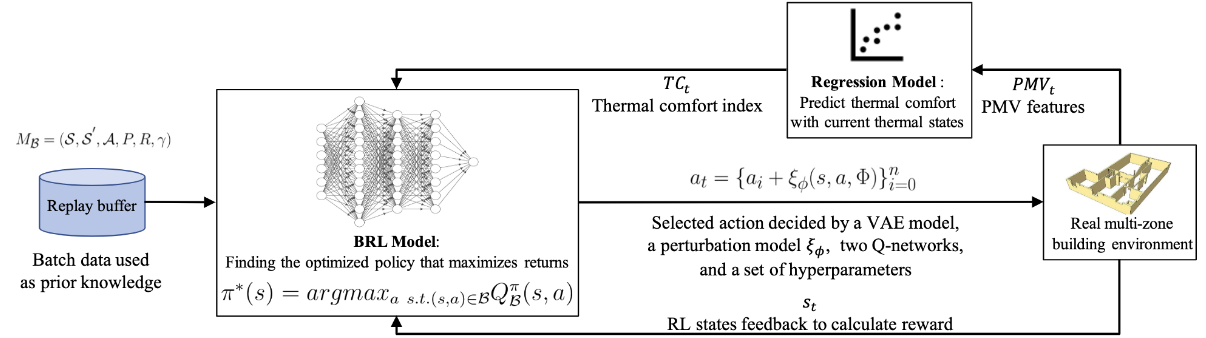

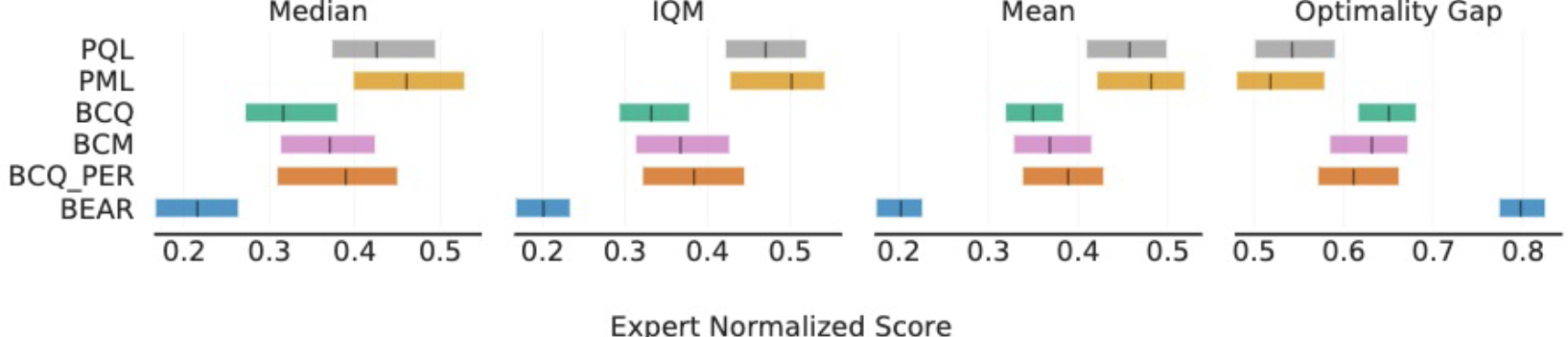

This paper proposes an adaptive policy regularization approach for transferring policies learned from offline datasets to online deployment in HVAC control. The method uses weighted increased simple moving average Q-value estimators to stabilize policy updates and improve safety during online fine-tuning.
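To make the idea of smoothing Q-value estimates during fine-tuning more concrete, here is a minimal Python sketch. It rests on two assumptions of ours, not the paper's definitions. First, the estimator is a linearly weighted moving average over the last `window` mean Q-values, with newer values weighted more heavily. Second, the regularization weight `alpha` is nudged down when the smoothed Q-value rises and nudged up otherwise. The class `WeightedMovingAverageQ`, the function `adjust_regularization`, and all parameter names are hypothetical. See the paper for the actual formulation.

```python
from collections import deque
from typing import Deque


class WeightedMovingAverageQ:
    """Hypothetical estimator: linearly weighted moving average of recent Q-values.

    Assumption (not the paper's definition): the i-th oldest value in the
    window gets weight i, so the newest estimate counts the most.
    """

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be >= 1")
        self.history: Deque[float] = deque(maxlen=window)

    def update(self, q_value: float) -> float:
        """Record a new mean Q-value and return the weighted moving average."""
        self.history.append(q_value)  # deque drops the oldest value when full
        weights = range(1, len(self.history) + 1)
        weighted_sum = sum(w * q for w, q in zip(weights, self.history))
        return weighted_sum / sum(weights)


def adjust_regularization(
    current_avg: float,
    previous_avg: float,
    alpha: float,
    step: float = 0.1,
    alpha_min: float = 0.0,
    alpha_max: float = 1.0,
) -> float:
    """Hypothetical rule: loosen the offline-policy constraint while the
    smoothed Q-value improves, tighten it when it stalls or drops."""
    alpha = alpha - step if current_avg > previous_avg else alpha + step
    return min(max(alpha, alpha_min), alpha_max)  # clip to [alpha_min, alpha_max]


if __name__ == "__main__":
    estimator = WeightedMovingAverageQ(window=3)
    alpha, prev = 0.5, float("-inf")
    for q in [1.0, 2.0, 3.0, 2.5]:  # made-up Q-values for illustration only
        avg = estimator.update(q)
        alpha = adjust_regularization(avg, prev, alpha)
        prev = avg
        print(f"q={q:.2f} smoothed={avg:.3f} alpha={alpha:.2f}")
```

Weighting recent estimates more heavily lets the average respond to genuine improvement during online fine-tuning while still damping single-step spikes in Q-value estimates, which are a common source of unstable policy updates.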

Recommended citation: Liu, Hsin-Yu. Adaptive Policy Regularization for Offline-to-Online Reinforcement Learning in HVAC Control. NeurIPS CCAI & ACM BuildSys'24. Nov. 2024. https://dl.acm.org/doi/pdf/10.1145/3671127.3698163